Ground Texture Localization and SLAM

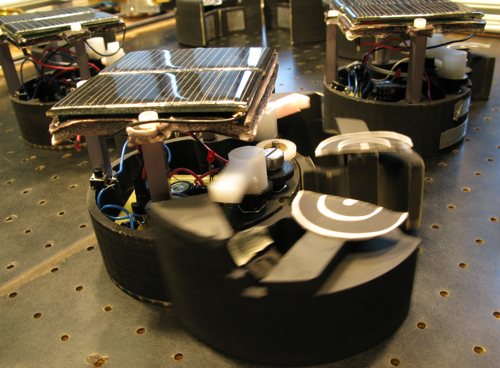

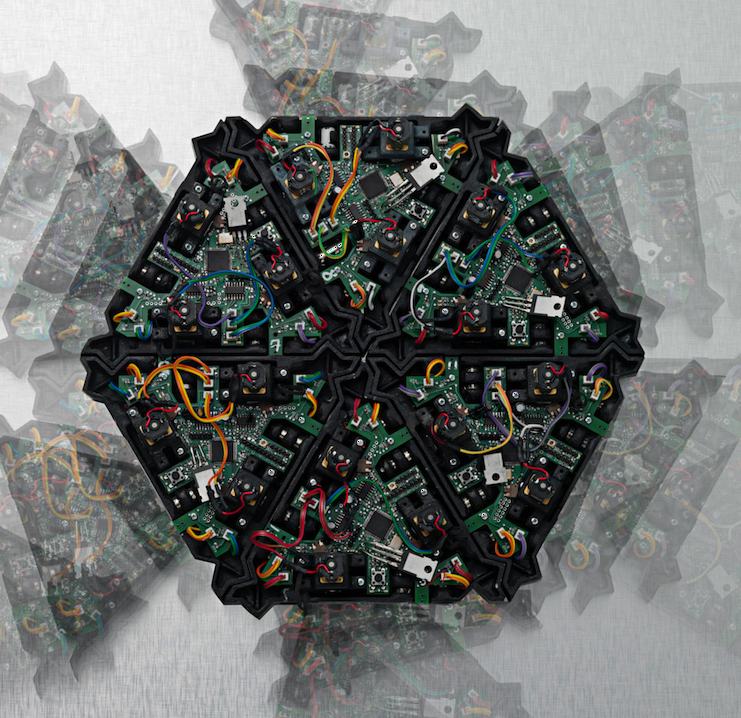

Reliable simultaneous localization and mapping (SLAM) in novel environments is essential for safe and effective mobile robot navigation, enabling applications such as autonomous delivery, hospitality, and inspection. However, localization with LiDAR or outward-facing cameras can struggle in dynamic environments, where moving objects occlude the scene and lighting varies. Ground texture localization addresses these limitations by using a downward-facing camera to localize with high precision from the ground’s visual appearance. In this project, we are researching novel techniques that exploit the inherent geometric and lighting constraints of ground texture SLAM to increase the accuracy, speed, and robustness of these algorithms. Our aim is precise, reliable localization for autonomous ground robots operating in challenging, unstructured environments, including visually degraded conditions.